The PS3 was a ridiculous machine

June 13, 2024

[This article is an adaptation of the video embedded above.]

This is a supercomputer

What makes this machine special is that it was built out of PlayStation 3s. Yes, the gaming console.

It used not just tens or hundreds, but a total of 1,760 consoles. Racks upon racks of PS3s were connected together, and with the help of about a hundred coordinator servers, it became the 33rd fastest supercomputer in the world when it was completed. The project was named the “Condor Cluster”.

The PS3 supercomputer

Now, the Condor Cluster was definitely not a gimmick. There is a very good reason for using PlayStation 3s as a supercomputer, and that reason is economics.

Economics of the PS3s

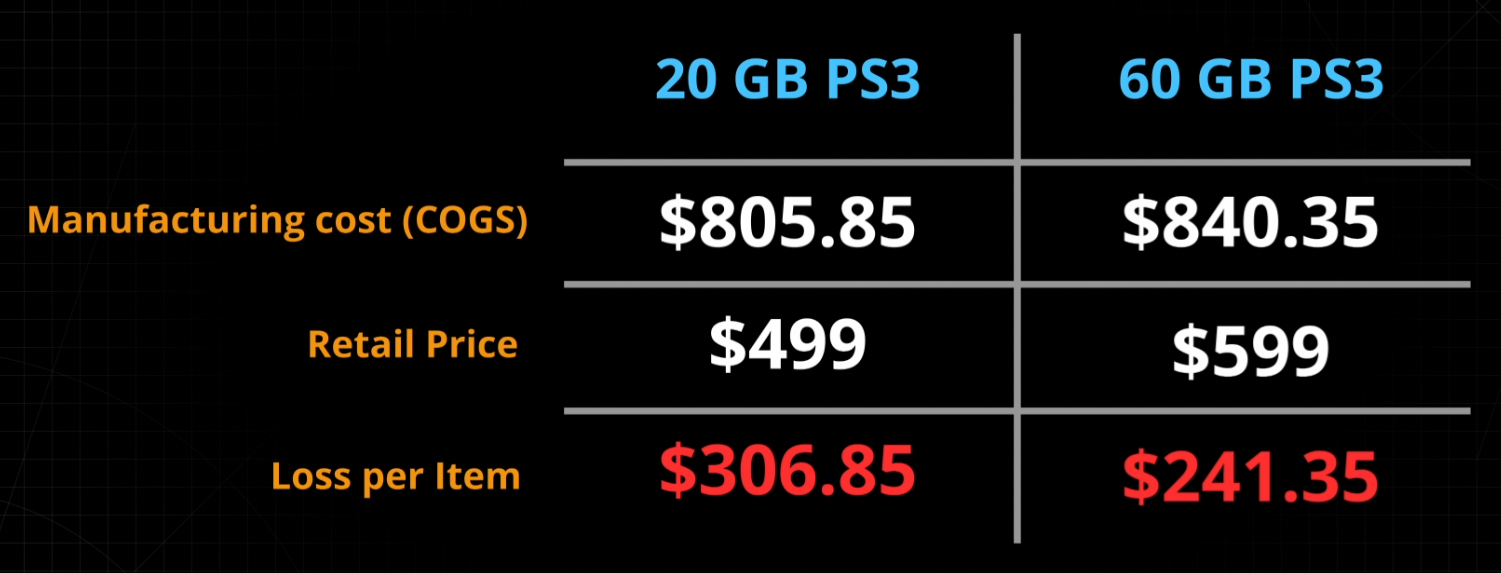

You see, the PlayStation 3 was sold at a loss. By some estimates, Sony lost around $200 to $300 per console, depending on the model sold. It cost Sony $800 to manufacture one machine, yet in 2006 anybody could walk into a store and buy a brand-new PlayStation 3 for only $500.

This was not a mistake. On the contrary, it was a strategic plan to make more profit in the long run. Sony planned to offset its hardware losses by selling games and services such as the PSN. A lower hardware price lets more people buy the console, which in turn increases sales of games and other services.
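The strategy boils down to simple arithmetic: each console has to eventually bring in enough software margin to cover the loss taken on the hardware. Below is a minimal sketch that uses the article's $800 cost and $500 price; the $10 that Sony earns per game sold is a hypothetical figure chosen for illustration, not a reported number.

```python
import math


def games_to_break_even(hardware_loss: float, margin_per_game: float) -> int:
    """Number of games one buyer must purchase before the platform holder
    recovers the loss it took on that buyer's console."""
    if margin_per_game <= 0:
        raise ValueError("margin_per_game must be positive")
    return math.ceil(hardware_loss / margin_per_game)


# Loss per console: the article's $800 manufacturing cost minus the $500 price.
loss = 800 - 500  # $300

# HYPOTHETICAL: assume Sony earns $10 on every game sold for its console.
MARGIN_PER_GAME = 10.0

print(games_to_break_even(loss, MARGIN_PER_GAME))  # 30
print(games_to_break_even(200, MARGIN_PER_GAME))   # 20 (low end of the estimate)
```

Every game a buyer purchases beyond that point is profit, which is why getting the console into as many homes as possible matters more than earning money on the box itself.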

Financial Success

The PS3 itself was not particularly successful financially. It took 4 years before Sony's games division started posting a positive operating income, and it took even longer to earn back the earlier investment.

Sony's later consoles did much better: the PS4 took only 6 months to become profitable, and the PS5 took around a year.

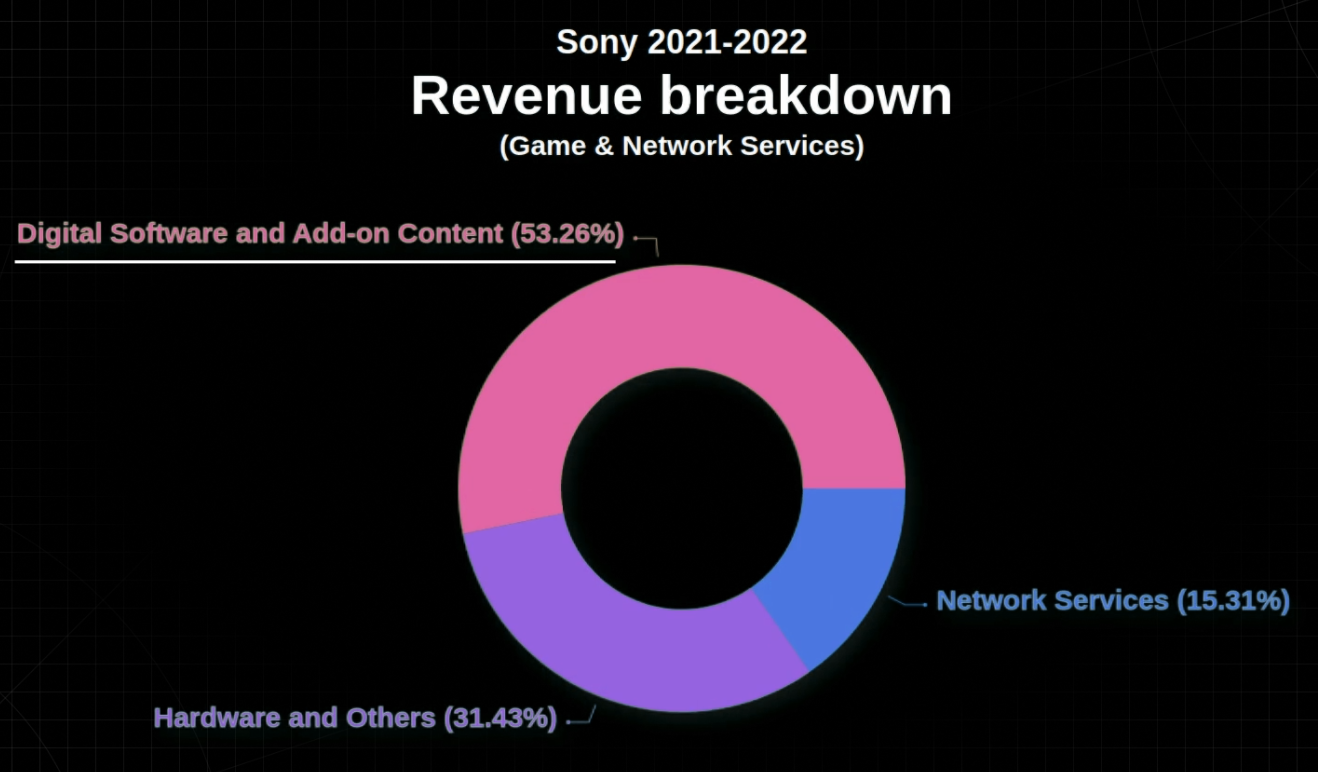

In its 2021 financial report, Sony reported total revenue of 2.5 trillion yen for its gaming business. Of that total, 53% came from its “Digital Software and Add-on Content” category, which covers games bought from the PlayStation Store along with any in-game transactions.

Selling at a loss as a strategy for gaming consoles

Now, Sony is certainly not unique in this regard. Many gaming consoles are sold at a loss at launch, and this strategy is especially important when the console faces a strong competitor.

Recently, Valve Software released the Steam Deck, a competitor to the Nintendo Switch. In an interview, Gabe Newell, the president of Valve, said that the Steam Deck's aggressive pricing is a long-term strategy to “establish a product category”.

But if most gaming consoles are sold at a loss, then what is so special about the PS3 that it was used to build a supercomputer?

The answer to that is the unique architecture of the PS3.

CPU & GPU

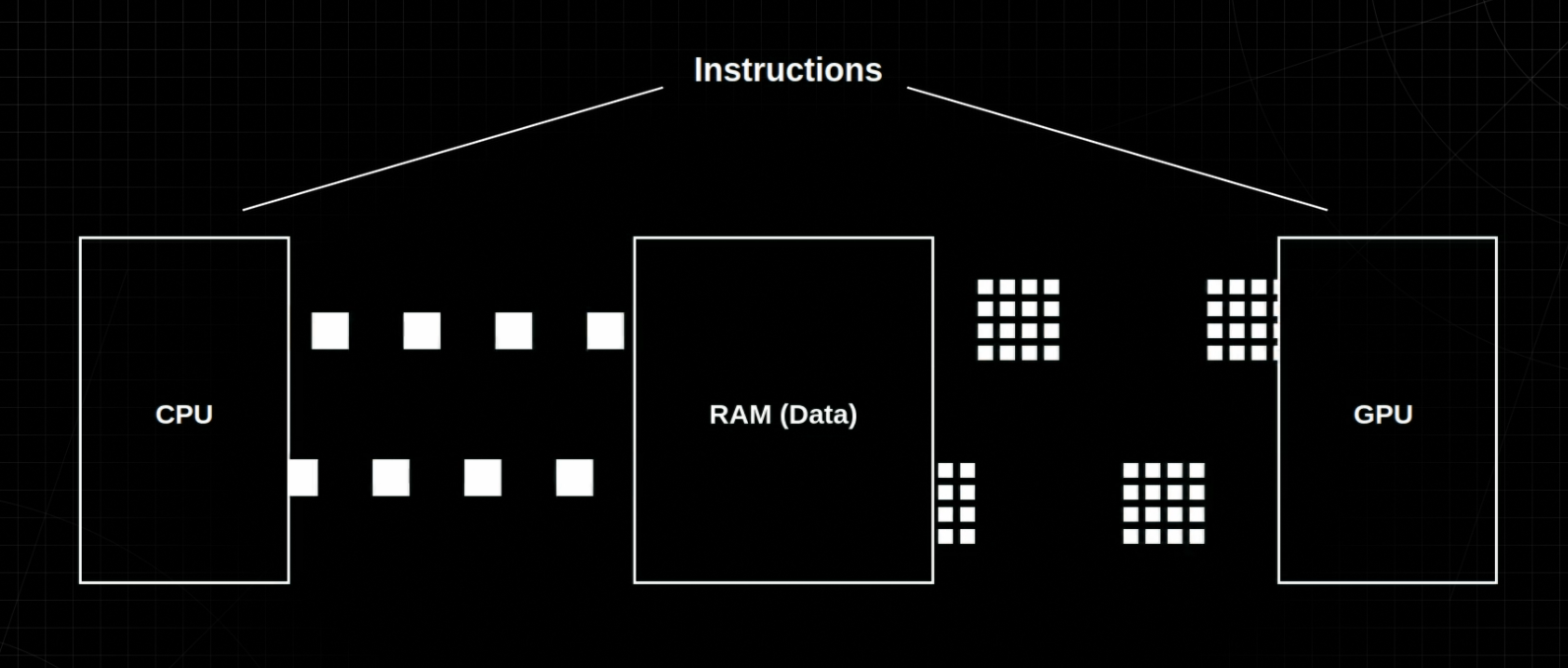

You see, computers as we know them today are typically built from several replaceable components. At the core is the CPU, the Central Processing Unit. This is the brain of the system: it performs the computations that need to be done within the computer.

Most modern computers are also equipped with a GPU, the Graphics Processing Unit. A GPU is very similar to a CPU in that it is also a processor. The difference is that, as the name implies, a GPU is optimized to perform graphics calculations very, very well.

The CPU takes an instruction, processes it, and outputs the result. It can do this extremely quickly, but it essentially works through its instructions one at a time.

In contrast, a GPU is like a group of many smaller, less powerful CPUs. Each of them is not very fast, but together they can process far more data in one go. This makes the GPU very efficient at graphics workloads, since an image on a display is made up of a huge number of pixels that can each be computed independently.
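This trade-off can be captured in a toy model. All numbers below are hypothetical: one fast core versus 1,000 cores that are each 10 times slower, working on items that are completely independent of each other, and ignoring every real-world overhead such as memory transfers.

```python
import math


def time_to_finish(num_items: int, workers: int, seconds_per_item: float) -> float:
    """Idealized time for `workers` identical units to process `num_items`
    independent items, each taking `seconds_per_item` on a single unit."""
    rounds = math.ceil(num_items / workers)  # items are handed out in waves
    return rounds * seconds_per_item


# HYPOTHETICAL figures: one fast "CPU" core vs. 1,000 slower "GPU" cores.
CPU_WORKERS, CPU_SECONDS = 1, 1e-9
GPU_WORKERS, GPU_SECONDS = 1_000, 1e-8

for n in (1, 1920 * 1080):  # a single calculation vs. every pixel of a 1080p frame
    cpu_t = time_to_finish(n, CPU_WORKERS, CPU_SECONDS)
    gpu_t = time_to_finish(n, GPU_WORKERS, GPU_SECONDS)
    print(f"{n:>9} items: CPU {cpu_t:.2e} s, GPU {gpu_t:.2e} s")
```

For a single calculation the fast core wins by a factor of 10, but for every pixel of a 1920×1080 frame the many slow cores finish roughly 100 times sooner. That is the rifle versus the shotgun.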

It's like using a shotgun compared to a rifle: they fill a similar role but do it in completely different ways.

Rise of CPU parallelization

Back in the early 2000s, this separation between the CPU and GPU was not as pronounced as it is today. During that period, CPUs were mostly single-core, and year after year a lot of effort was invested into making them faster. This was primarily done by raising their clock speed, the frequency listed in the product specification. A higher number typically meant a faster CPU.

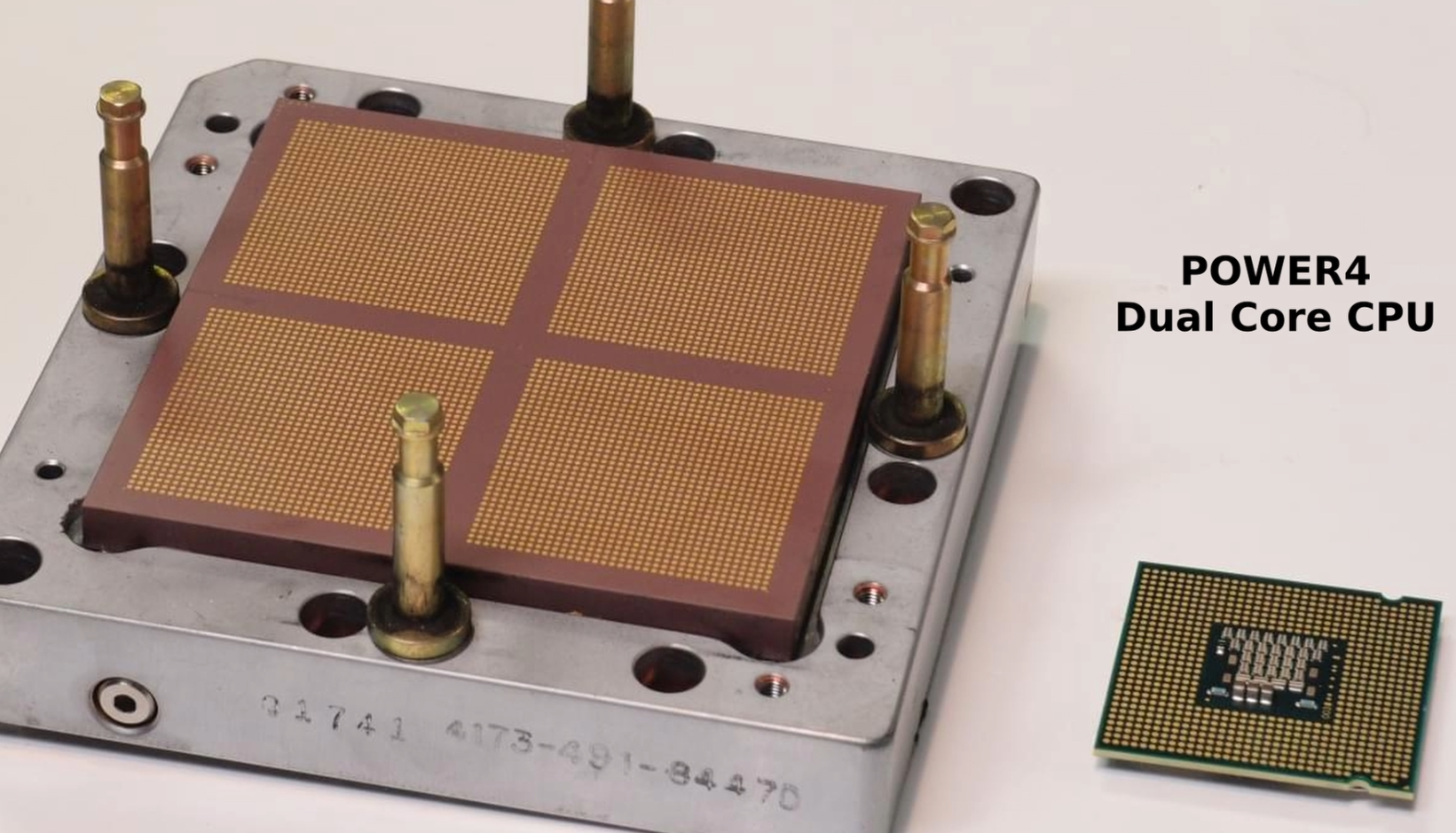

However, as transistors shrank, it became increasingly hard to keep raising clock speeds, largely because faster chips ran hotter and drew more power. Realizing this, manufacturers shifted their focus to running instructions in parallel: instead of one processor, why not pack two of them into one chip? Each of these processors is what we now call a CPU core.

In 2001, IBM released the first dual-core CPU. With it, programmers could run two independent streams of instructions at exactly the same time. This opened up a whole new world of possible performance improvements. Today, we have CPUs with 64 cores and more.

Still, this was new technology, and using the full potential of the hardware required software developers to work in new ways. It's like hiring a brand-new assistant to help with chores: how do you split the work, and how do you communicate efficiently? Regardless, this was a step in the right direction. In the following years, Sony, Toshiba and IBM formed an alliance called STI. Together, they built on their existing multi-core architecture and released the Cell architecture.
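The assistant analogy has a well-known quantitative form, Amdahl's law, brought in here for illustration (the article itself doesn't mention it). If only a fraction p of a program's running time can be split across n cores, the best possible speedup is 1 / ((1 - p) + p / n). A minimal sketch, with arbitrary example fractions:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: best-case overall speedup when only `parallel_fraction`
    of a program's running time can be spread across `cores` cores."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction  # this part still runs on one core
    return 1.0 / (serial + parallel_fraction / cores)


for p in (0.5, 0.9, 1.0):
    print(f"{p:.0%} parallel: 2 cores -> {amdahl_speedup(p, 2):.2f}x, "
          f"64 cores -> {amdahl_speedup(p, 64):.2f}x")
```

Even with 64 cores, a program that is only half parallel never gets close to 2x faster, which is why learning to split the work well mattered so much.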

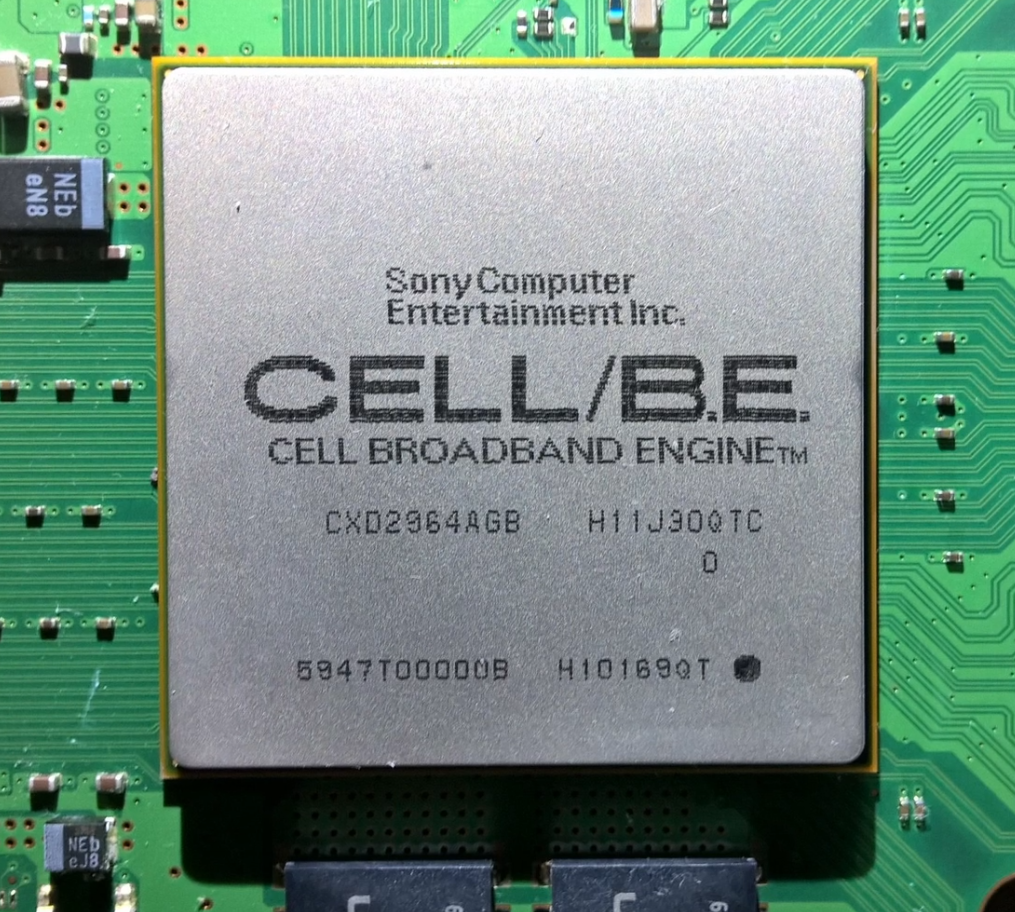

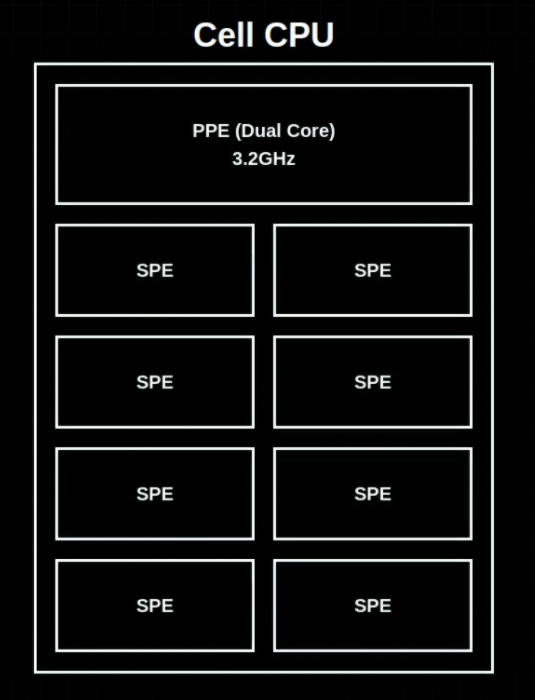

The Cell CPU

The Cell CPU was designed to be a number-crunching powerhouse for all sorts of fields. Continuing the focus on parallelization, it paired one general-purpose core, the PPE (Power Processing Element), which could run two threads at once, with 8 smaller processors called SPEs (Synergistic Processing Elements). The main processor on its own wasn't all that fast, but used well, the chip as a whole could achieve very high performance. Whereas today's computers split work between CPUs and GPUs, the Cell architecture sat somewhere in between.

Cell in PS3

And the first major commercial use of this architecture was in, you probably guessed it, the PlayStation 3. Sony actually planned to ship the PS3 without a separate GPU until late in development, when it realized that the Cell by itself probably wouldn't provide enough power.

Now, a game developer could use the Cell CPU in many different ways.

Cell CPU Workflow

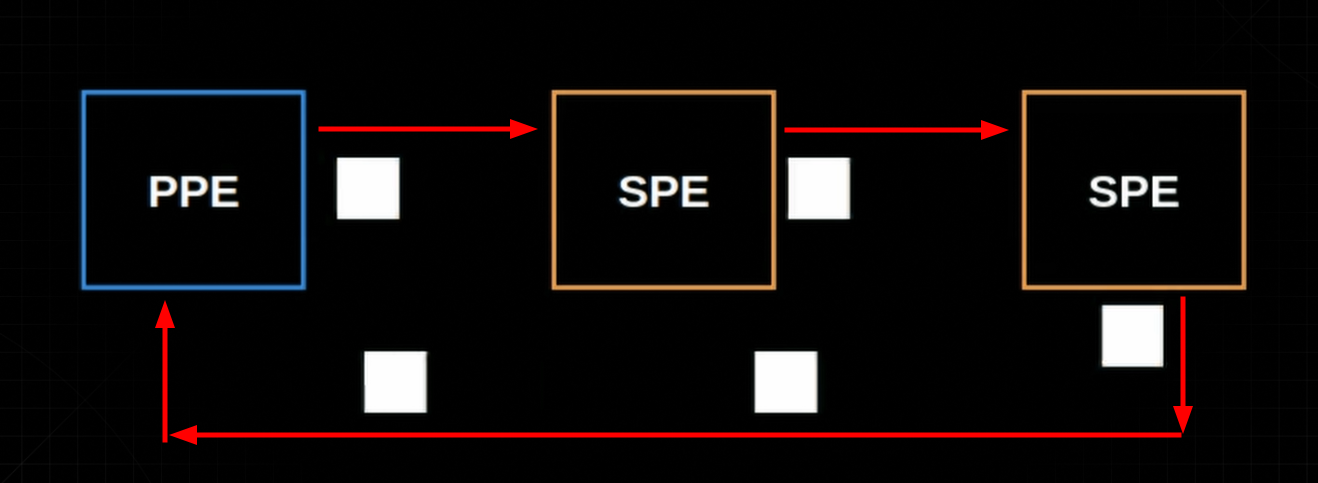

One way is to run the work as a pipeline of stages. First, the PPE sends work to a single SPE. When that SPE finishes, it passes its data along to the next SPE. This continues until the last stage is done and the data is returned to the PPE.
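A minimal sketch of this pipeline in Python, with one thread per stage standing in for an SPE and queues standing in for the hand-off between them. The stage functions are hypothetical placeholders. Real SPEs moved data between their local memories with DMA transfers, which this model ignores, and Python threads illustrate the structure of the pattern rather than real speedup.

```python
import queue
import threading

STOP = object()  # sentinel marking the end of the data stream


def spe_stage(work, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """One pipeline stage: apply `work` to each item and pass it on."""
    while True:
        item = inbox.get()
        if item is STOP:
            outbox.put(STOP)  # forward the sentinel so the next stage stops too
            return
        outbox.put(work(item))


def run_pipeline(stages, items):
    """The 'PPE' feeds items into the first stage and collects whatever
    comes out of the last one."""
    queues = [queue.Queue() for _ in range(len(stages) + 1)]
    threads = [
        threading.Thread(target=spe_stage, args=(work, queues[i], queues[i + 1]))
        for i, work in enumerate(stages)
    ]
    for t in threads:
        t.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(STOP)
    results = []
    while (out := queues[-1].get()) is not STOP:
        results.append(out)
    for t in threads:
        t.join()
    return results


# HYPOTHETICAL stages: scale, offset, then clamp each value.
stages = [lambda x: x * 2, lambda x: x + 1, lambda x: min(x, 10)]
print(run_pipeline(stages, range(8)))  # [1, 3, 5, 7, 9, 10, 10, 10]
```

Because each stage handles items in order, stage 1 can already work on the next item while stage 2 is still busy with the previous one, which is where a pipeline gets its throughput.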

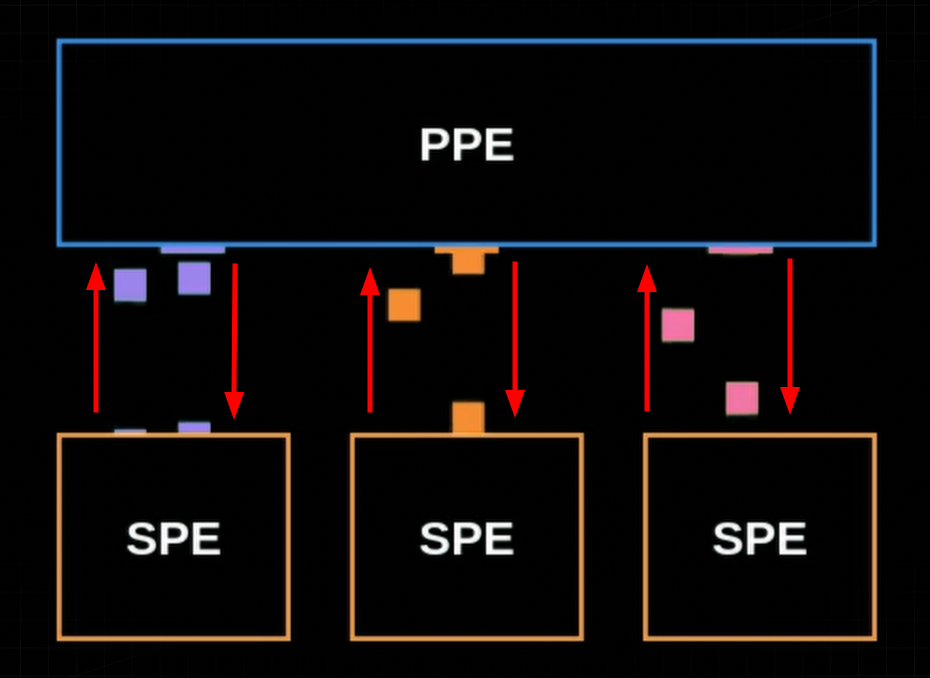

Another way is to do the work in parallel. The PPE first splits a task into multiple sub-tasks and sends each of them to a different SPE. Once the SPEs finish their computations, they send their results back to the PPE, which combines them into the final result.
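This split-and-combine approach is a scatter/gather pattern. A minimal Python sketch, with threads standing in for SPEs and a hypothetical sub-task (a sum of squares). The function names and the default of 6 workers are illustrative choices, and Python threads show the shape of the pattern rather than real parallel speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut `data` into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sub_task(chunk):
    """Work done by one 'SPE': here, a sum of squares over its chunk."""
    return sum(x * x for x in chunk)


def ppe_scatter_gather(data, num_spes=6):
    chunks = split(data, num_spes)                   # 1. split the task
    with ThreadPoolExecutor(max_workers=num_spes) as pool:
        partials = list(pool.map(sub_task, chunks))  # 2. one chunk per worker
    return sum(partials)                             # 3. combine partial results


print(ppe_scatter_gather(list(range(1, 101))))  # 338350
```

Unlike the pipeline, every worker here runs the same code on different data, so the combine step at the end is the only point where the workers have to wait for each other.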

Performance Issue

Regardless of the method, the PS3 had a lot of potential in terms of raw computing power. It was an innovation that could move the industry forward, and there was nothing like it before. But at the time, many players complained that games performed worse on the PS3 than on its competitor, the Xbox 360. At first glance this is odd, because the PS3 had a theoretical peak of 230 GFLOPS, while the Xbox 360 peaked at only 77 GFLOPS. So what was the problem?

It turns out that making use of these new processors required a lot of extra work: work that didn't necessarily make a game more fun, and work that game developers didn't have to do on other consoles.

A game originally made for the Xbox 360 could be ported to Windows without much trouble. Porting the same game to the PS3 without changing how it works, however, was far from trivial. As a result, many games simply ignored the SPEs of the Cell CPU, and the PPE by itself was significantly weaker than the CPU in the Xbox 360.
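Where does the 230 GFLOPS figure come from, and why did ignoring the SPEs hurt so much? Here is a back-of-the-envelope sketch, assuming the commonly published specifications: a 3.2 GHz clock, with each SPE (and the PPE's vector unit) completing one 4-wide single-precision fused multiply-add, i.e. 8 floating-point operations, per cycle. Peak figures assume every unit is busy on every cycle, which real game code rarely achieves, and the PS3 itself shipped with one of the eight SPEs disabled.

```python
CLOCK_GHZ = 3.2
# Assumed: one 4-wide single-precision fused multiply-add per cycle per unit.
# A multiply-add counts as 2 floating-point operations, so 4 lanes x 2 = 8.
FLOPS_PER_CYCLE = 4 * 2


def peak_gflops(units: int) -> float:
    """Theoretical peak of `units` identical vector units, in GFLOPS."""
    return units * FLOPS_PER_CYCLE * CLOCK_GHZ


spes = peak_gflops(8)  # all eight SPEs
ppe = peak_gflops(1)   # the PPE on its own
print(f"SPEs {spes:.1f} + PPE {ppe:.1f} = {spes + ppe:.1f} GFLOPS")
print(f"PPE alone: {ppe / (spes + ppe):.0%} of the peak")
```

Under these assumptions, a game that leaves the SPEs idle can draw on only about a ninth of the chip's theoretical peak.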

Still, there is no denying that when used well, the PS3 could produce stunning graphics for its time.

Use In Scientific Field

Now, remember how the Cell architecture sits somewhere between a modern CPU and GPU? The kinds of computation that make a processor good at graphics are similar to those used in scientific calculations. So it's no surprise that researchers in different fields saw the potential of this CPU.
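To see the similarity, compare a typical graphics operation with a typical scientific one. In this minimal sketch (both functions are illustrative), one brightens pixels and the other performs one explicit time step of 1D heat diffusion, a simple finite-difference stencil. In both, each output value depends only on the previous state, so every element could be computed independently, which is exactly the kind of work the SPEs or a GPU handle well.

```python
def shade(pixels, brightness):
    """Graphics-style work: each output pixel depends only on its input pixel."""
    return [min(255, int(p * brightness)) for p in pixels]


def diffuse(temps, alpha):
    """Science-style work: one explicit step of 1D heat diffusion.

    Every interior point is updated from its neighbours' values in the
    *previous* step, so all points are independent of each other within
    the step, just like pixels.
    """
    new = temps[:]  # the two boundary values stay fixed
    for i in range(1, len(temps) - 1):
        new[i] = temps[i] + alpha * (temps[i - 1] - 2 * temps[i] + temps[i + 1])
    return new


print(shade([10, 100, 200], 1.5))                  # [15, 150, 255]
print(diffuse([0.0, 0.0, 100.0, 0.0, 0.0], 0.25))  # [0.0, 25.0, 50.0, 25.0, 0.0]
```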

On January 3, 2007, Dr. Frank Mueller, Associate Professor of Computer Science at North Carolina State University, clustered a total of 8 PS3s. The cluster cost $5,000, and he claimed that it was cheaper than some desktop computers with only a fraction of its computing power. The cluster was eventually used for research and for teaching classes.

A few months later, Gaurav Khanna, a professor in the Physics Department of the University of Massachusetts Dartmouth, built a cluster of 8 PS3s, which he named the PS3 Gravity Grid. The cluster was used to run calculations simulating the collision of two black holes.

And in May 2008, the Laboratory for Cryptologic Algorithms broke the record for the discrete logarithm problem on elliptic curves, the problem underlying elliptic-curve Diffie-Hellman, using a cluster of 200 consoles.

The Condor Cluster

And that brings us to the Condor Cluster, perhaps the best-known story of the PS3 being used for scientific purposes. The Air Force Research Laboratory started by experimenting with 8 connected machines. After seeing the results, it increased the number to 336 machines, which performed even better. The lab then asked the Department of Defense for $2.5 million to build the full machine.

In November 2010, the lab announced the finished machine, built from a total of 1,760 consoles. According to the lab, an equivalent system built without PS3s would have cost 10 times as much. Originally, the lab wanted to add more machines to the cluster. However, by the time the Department of Defense approved the budget, PS3s were getting harder to find, especially the ones the lab needed.

Removal of OtherOS

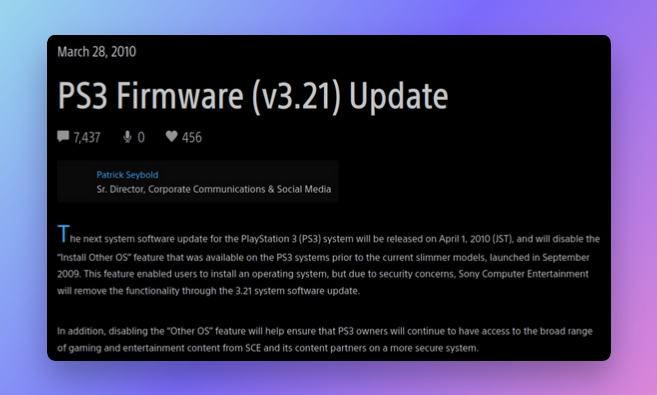

You see, on March 28, 2010, just a few months earlier, Sony had announced the removal of OtherOS, the feature that allowed the PS3 to run an alternative operating system. With it, developers could install Linux and write and run any software they wanted. In that environment they couldn't access the GPU, but the SPEs were fully functional. Once OtherOS was removed, new PS3s with the update applied were no longer usable for the supercomputer.

A cluster that had already been set up wouldn't run into any problems: consoles on the older firmware kept working as before, and as long as they weren't connected to the PSN, they wouldn't install updates automatically. However, this made replacing individual consoles very difficult. Newer units shipped with the updated firmware, so the lab had to track down older consoles that still had the old version installed.

Regardless, the cluster ran well and was in operation for about 5 years. Today, it no longer makes sense to use the PS3. As CPUs and GPUs have improved and become standardized, newer machines, consoles included, share a very similar CPU-plus-GPU architecture. The Cell architecture itself was discontinued not long after.

Aftermath

This standardization is great for game developers, as it lets them focus on what they want to do most: creating games.

The story is similar in the scientific field. As GPUs became mainstream, mass production drove prices down, giving researchers more computing power for less money. Most of today's top supercomputers are built from hardware very similar to what can be found in the consumer market.

And so the saga of the game console supercomputer ends.

GPGPU

But wait, there’s more. Today, we are seeing exciting developments in GPGPU (general-purpose computing on GPUs): using the GPU to handle computation that was traditionally handled by the CPU.

Coming from the opposite direction, CPUs are gaining the ability to perform work typically done on the GPU. This takes the form of ARM's Scalable Vector Extension (SVE) and the RISC-V Vector extension. Today, one of the fastest supercomputers in the world, Fugaku, is built on ARM processors that use SVE.
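The key idea behind SVE is that code does not hard-code a vector width. The same loop runs on hardware with short or long vectors, and a per-lane predicate switches off the lanes that would run past the end of the data. The following is a plain-Python model of that idea, not real SVE code (real code would rely on compiler auto-vectorization or the ARM C Language Extensions intrinsics such as `svwhilelt_b32`); the `vector_lanes` parameter is a stand-in for the hardware vector length.

```python
def vla_add(a, b, vector_lanes):
    """Element-wise a + b, processed `vector_lanes` elements per step.

    Models a vector-length-agnostic loop: the code never assumes a fixed
    vector width, and a per-lane predicate disables the lanes that would
    fall past the end of the arrays on the final step.
    """
    if len(a) != len(b):
        raise ValueError("arrays must have the same length")
    n = len(a)
    out = [0] * n
    i = 0
    while i < n:
        # Predicate: lane k is active only while i + k < n
        # (the condition SVE's WHILELT instruction computes).
        predicate = [i + k < n for k in range(vector_lanes)]
        for k, active in enumerate(predicate):
            if active:
                out[i + k] = a[i + k] + b[i + k]
        i += vector_lanes  # advance by one full vector per iteration
    return out


a = [1, 2, 3, 4, 5]
b = [10, 20, 30, 40, 50]
print(vla_add(a, b, 4))   # [11, 22, 33, 44, 55]
print(vla_add(a, b, 16))  # [11, 22, 33, 44, 55]
```

With 4 lanes or 16 lanes the result is the same; only the number of loop iterations changes. This is what lets a single program run unchanged on CPUs with different vector widths.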

So perhaps PlayStation 3 was indeed far ahead of its time.

Did you know the animation in the YouTube video was made using React? Read this post if you want to know more!

Michael is a full-stack developer and the founder of Recall and DbSchemaLibrary. He loves to make things and occasionally write articles like these.